|

|

|

filthy

SFN Die Hard

USA

14408 Posts |

Posted - 01/03/2011 : 14:38:46 [Permalink]

|

I'd just like to toss in that ants do indeed hold conversations. They do it through chemical pheromones and they are very exact. So too, are honey bees and other communal insects.

Is anyone else actually reading these long, tedious posts?

|

"What luck for rulers that men do not think." -- Adolf Hitler (1889 - 1945)

"If only we could impeach on the basis of criminal stupidity, 90% of the Rethuglicans and half of the Democrats would be thrown out of office." ~~ P.Z. Myers

"The default position of human nature is to punch the other guy in the face and take his stuff." ~~ Dude

Brother Boot Knife of Warm Humanitarianism,

and Crypto-Communist!

|

|

|

|

JerryB

Skeptic Friend

279 Posts |

Posted - 01/03/2011 : 15:14:14 [Permalink]

|

Originally posted by Dave W.

No, that paper is fine. But it's not evidence of what you claimed was a "theory of ID." Shannon's Information Theory is not a "theory of ID." |

LOL....I never stated that or anything you could possibly construe to mean that-----STRAWMAN ALERT!

| I'd expect to find a refutation of modern evolutionary theory. |

Why, since the bulk of us are evolutionists?

| There are lots more, too. |

Let's hear them.

| So you are saying that the location of the "bad" genes don't matter to your entropy calculation. A mutation leading to cystic fibrosis is exactly as "disordering" as a mutation leading to brittle nails? |

No, I said exactly the opposite and you know I did. You asked me why S could not be used to determine when mutational meltdown would come upon a population and I replied because it cannot determine the severity of the mutation.

| I have been showing you why you're wrong, and you evaded the discussion by claiming that I'm criticizing the scientists. You've avoided engaging with most of my criticisms. |

Bull, I have addressed them all and you know it. You simply blow off my responses with one liners.

| Schrödinger never did anything with the formula. He said that entropy is proportional to the log of the disorder, D, in a cell (including the disorder due to heat, and due to different molecules being mixed together - he didn't include genetic mutations in his examples), and talks about how cells avoid entropic death. He never once put a number in for D. He never once actually calculated the entropy of any cell (but if he had, he would have insisted - as he does in the book - that the units on the entropy are calories per °C, and that those units are important to understanding what he's saying). |

OK, so what? He showed you how to calculate it and it is most correct to use any energy/temp. Now I'm not going to get into an is/too is/not argument with you on this one, just please take a high school chem course.

| So you're challenging me to email or phone a scientist and ask him/her to agree with me that you're wrong? Why would any scientist spend time on your nonsense? I mean, there are lots of scientists who wrote highly critical reviews of Tipler's nonsense (Stenger's, for example), but you, Jerry, are no Frank Tipler. |

I'm sorry, would you cut and paste the part where I claimed to be Frank Tipler? LOL..

| Schrödinger was absolutely correct that living cells are open systems which are essentially generators of "negative entropy" (or "ordering"). Since you deny Schrödinger's main point, and you claim he's right, you must be wrong. |

What? BAHAHAhahahah........Now that is funny. I don't claim him to be wrong.....I used him as a reference.....WHEW.....are you getting frustrated? LOL

| You just want to get away from having to defend your choice of using two numbers that you basically pulled out of the air. |

No, I want to get away from having to say I used their figures, 1.6 deleterious mutations over a period of about 6 million years and you coming back with a juvenile 'did not' 15,000 more times.

| Why is Darwinism, which insists on only natural processes, "poof?" |

Because you have no evidence for those magical auras that seem to poof amoebas into Arnold Schwarzenegger. Just trust us, it happened.

| I've seen no evidence that anything unnatural happened during the Cambrian Explosion. |

You've seen no evidence that anything happened during the CE other than organisms appearing in the record fully formed and ready to go in their environment and staying that way until they disappear from the record.

That was either design or a bunch of well organized Darwinian poofs.

My argument is that your argument's conclusion is that the numbers you fed into your calculation are garbage, thus invalidating your calculation and your argument. I assume nothing in making that argument.

|

Sure you did. If you take the position that the numbers are garbage, you are denying man's walk from a common ancestor as well. However, if you assume the numbers are accurate and you DO believe that man and Chimp shared a common ancestor, then the math walks.

Either way you lose the debate. Darwinism is a crock. |

|

|

|

JerryB

Skeptic Friend

279 Posts |

Posted - 01/03/2011 : 15:15:51 [Permalink]

|

Originally posted by filthy

I'd just like to toss in that ants do indeed hold conversations. They do it through chemical pheromones and they are very exact. So too, are honey bees and other communal insects.

Is anyone else actually reading these long, tedious posts?

|

Probably not.....LOL |

|

|

|

R.Wreck

SFN Regular

USA

1191 Posts |

Posted - 01/03/2011 : 15:29:09 [Permalink]

|

Originally posted by JerryB

Originally posted by R.Wreck

| That is exactly what we are calculating in the human genome when we note an entropic rise: an information loss. |

Are you sure about that?

Information and Entropy

Now that I've got a bit of background and origins done, it's time to get to some of the fun stuff.

As I said yesterday, both of the big branches of information theory have a concept of entropy. While the exact presentations differ, because they're built on somewhat different theoretical foundations, the basic meaning of entropy in both branches is pretty much the same. I'm going to stick with the terminology of the Kolmogorov-Chaitin branch, but the basic idea is the same in either.

In K-C theory, we talk about the information content of strings. A string is an ordered sequence of characters from a fixed alphabet. It doesn't really matter what alphabet you choose; the point of this kind of theory isn't to come up with a specific number describing the complexity of a string, but to be able to talk about what information means in a formal algorithmic sense.

The K-C definition of the entropy of a string is the total quantity of information encoded in that string.

Here's where it gets fun.

Another way of saying exactly the same thing is that the entropy of a string is a measure of the randomness of the string.

Structured strings are structured precisely because they contain redundancy - and redundancy does not add information.

Which string has more information: "XXXXYYYYY" or "4X5Y"? They have the same amount. One is a lot smaller than the other. But they contain the same amount of information.

Here's a third way of saying exactly the same thing: the entropy of a string is the length of the smallest compressed string that can be decompressed into the original.

Here's a better example. Go back and look at the first two paragraphs of yesterday's post. It took 514 characters.

Here's the same information, compressed (using gzip) and then made readable using a unix utility called uuencode:

M'XL(")E8$$0``VIU;FL`19$Q=JPP#$7[6#8804;7KIN',`M+]#8804;HHLPH``)\8BMOPY

M[#Y/GCDG#

MLY2EI8$9H5GLX=*R(_+ZP/,-5-1#\HRJNT`77@LL,MZD,"H7LSUKDW3@$#V2

MH(KTO$Z^$%CN1Z>3L*J^?6ZW?^Y2+10;\+SOO'OC"/;7T5QA%987SY02I3I$

MLKW"W,VZ-(J$E"[$;'2KYI^\-_L./3BW.+WF3XE8)#8804;@D8X^U59DQ7IA*F+X/

MM?I!RJ*%FE%])Z+EXE+LSN*,P$YNX5/P,OCVG;IK=5_K&CK6J7%'+5M,R&J]

M95*W6O5EI%G^K)8B/XV#L=:5_`=5ELP#Y#\UJ??>[[DI=J''*2D];K_F230"

$`@(`````

`

That's only 465 characters; and if I didn't have to encode it to stop it from crashing your web-browser, it would only have been 319 characters. Those characters are a lot more random than the original - so they have a higher information density. The closer we get to the shortest possible compressed length, the higher the information density gets.
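As a rough illustration of the compression point above, here is a minimal Python sketch. It is not from the quoted post: it uses the standard-library `zlib` module instead of gzip plus uuencode, and the two sample inputs are made up for the example. The idea is only that highly redundant data compresses to far fewer bytes than random-looking data of the same length.

```python
import os
import zlib


def compressed_size(data: bytes) -> int:
    """Return the length in bytes of the zlib-compressed form of data."""
    return len(zlib.compress(data, 9))


# Highly structured input (assumed example): one character repeated 1000 times.
structured = b"X" * 1000

# Random-looking input (assumed example): 1000 bytes from the OS random source.
random_like = os.urandom(1000)

structured_size = compressed_size(structured)
random_size = compressed_size(random_like)

# The redundant input shrinks dramatically; the random input barely shrinks,
# and may even grow slightly because of the compressor's framing overhead.
print("structured:", structured_size, "bytes")
print("random-like:", random_size, "bytes")
```

Compressed length is only an upper bound on the K-C information content, since a better compressor might do better, so treat the sizes as an estimate rather than a measurement.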

Actually, I'm lying slightly; there's an additional factor that needs to be considered in K-C theory.

Remember that K-C comes from the foundational mathematics of computer science, something called recursive function theory. We're talking about strings being processed algorithmically by a program. So really, we get to talk about programs that generate strings. So it's not just strings: you have a program and a data string. Really, you measure information content of a string as the total size of the smallest pairing of program and data that generates that string. But for most purposes, that doesn't make that much difference: the combination of the program and the data can be encoded into a string.

In the two examples above, the program to follow is implied by the contents of the string; if we wanted to really model it precisely, we'd need to include the programs:

* For the string "XXXXYYYYY", the program is roughly:

    while there are more characters in the data:
        read a character
        output the character that you read
    end

* For the string "4X5Y", the program is roughly:

    while there are more characters in the data:
        read a number n from the data
        read a character c from the data
        output c n times
    end
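The two pseudocode programs above can be sketched in runnable form. This is a minimal Python version, not something from the quoted post; the function names `decode_literal` and `decode_run_length` are invented for illustration, and the run-length decoder assumes well-formed input (every run is a decimal count followed by exactly one character).

```python
def decode_literal(data: str) -> str:
    """Program 1: read each character and output it unchanged."""
    out = []
    for ch in data:
        out.append(ch)
    return "".join(out)


def decode_run_length(data: str) -> str:
    """Program 2: read a number n, then a character c, and output c n times.

    Assumes well-formed input such as "4X5Y"; malformed input raises an error.
    """
    out = []
    i = 0
    while i < len(data):
        # Read the count n, which may span several digits.
        j = i
        while j < len(data) and data[j].isdigit():
            j += 1
        n = int(data[i:j])
        # Read the character c that follows the count.
        c = data[j]
        out.append(c * n)
        i = j + 1
    return "".join(out)


# Both (program, data) pairs generate the same nine-character string.
print(decode_literal("XXXXYYYYY"))
print(decode_run_length("4X5Y"))
```

Under the program-plus-data view, the second data string is shorter but its program is slightly longer, which is the trade-off the paragraph above describes.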

Now, specifics aside: the definitions I gave earlier are pretty much correct in both K-C and Shannon information theory: information content is proportional to randomness is proportional to minimum compressed length.

So - what happens if I take a string in very non-compressed format (like, say, an uncompressed digitized voice) and send it over a noisy phone line? Am I gaining information, or losing information?

The answer is: gaining information. Introducing randomness into the string is adding information.
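The noisy-line claim can be checked roughly with a frequency-based Shannon estimate. The sketch below is an illustration rather than part of the quoted post: it compares an eight-character string of A's and B's with a copy in which one character has been replaced by noise. The helper name `shannon_entropy_per_char` is made up here, and a per-character frequency estimate is only a crude proxy for the K-C measure, which cannot be computed in general.

```python
import math
from collections import Counter


def shannon_entropy_per_char(s: str) -> float:
    """Estimate entropy in bits per character from character frequencies."""
    counts = Counter(s)
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


# Clean signal: four A's followed by four B's.
clean = shannon_entropy_per_char("AAAABBBB")

# Same signal with one character corrupted by "line noise".
noisy = shannon_entropy_per_char("AAAABxBB")

print(f"clean: {clean:.4f} bits/char")
print(f"noisy: {noisy:.4f} bits/char")
```

In this measure the corrupted string scores higher, which is the sense in which noise "adds information" in the quoted argument.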

"AAAABBBB" contains less information than "AAAABxBB".

The way this is used to refute bozos who claim things like "You can't create information" should be obvious. |

|

Of course, we haven't really been discussing Shannon Entropy in this discussion, but I guess it is applicable.

And I would simply beg to differ with this blogger (You did know that this is a blog and not a published paper?)

In any case, he states in his writings what Shannon posited:

"Shannon called the information content of a signal its entropy, because he saw a similarity between his information entropy and thermodynamic entropy: in a communicating system, entropy never decreases: it increases until the capacity of the channel is reached, and then it stays constant."

I'm afraid that is the way it is.

|

Way to miss the point. Again.

Simply put, you claimed that a rise in entropy = a loss of information.

| That is exactly what we are calculating in the human genome when we note an entropic rise: an information loss. |

That is ass-backward. So which is it? Is the genome increasing in entropy or losing information?

|

The foundation of morality is to . . . give up pretending to believe that for which there is no evidence, and repeating unintelligible propositions about things beyond the possibilities of knowledge.

T. H. Huxley

The Cattle Prod of Enlightened Compassion

|

|

|

|

R.Wreck

SFN Regular

USA

1191 Posts |

Posted - 01/03/2011 : 16:02:56 [Permalink]

|

Originally posted by JerryB

Originally posted by Kil

Oh please. Don't play dumb Jerry. (I'm giving you the benefit of the doubt. Did you see that?) It's common to call any introductory class in almost any subject, "Whatever the class is-101." Even in schools it's a common reference even if it's not the official name of the intro class. "Math 101" or "English 101" or "evolution 101," etcetera... |

In case people are curious, I will elaborate. I'm afraid that the class numbering system in colleges is very well structured and numbered so that students and parents will know what they are before the student is enrolled.

For example, physics 100 is a 1st year course for non-science majors. It's designed to be easy, little math, etc. and people can take that just because they may be curious about physics or their world around them. A 100 course is just an elective.

Physics 101 is much more difficult and is designed for science (or related) majors or minors.

Other courses are numbered for certain purposes. For example, myself--As an environmental chemistry major and biology minor I started at chem 110, a course for chemistry (or related) majors and biology 101 since that was my minor.

Second-year courses begin with 2, third-year courses with 3, etc.

College level evolution is normally taught in Genetics and Evolution 205, a 2nd year class for science majors. However, some institutions may differ with another specialty science such as microbiology as 205 and G&E as another.

But my point is that Evolution cannot be taught without 1st year prerequisite classes (such as bio 101) or no one would understand it (that is one intense course) and that is why it is a 200 level course.

|

You really don't understand what "[fill in the blank] 101" means in the vernacular? Are you really that clueless? |


|

|

|

|

Dave W.

Info Junkie

USA

26036 Posts |

Posted - 01/03/2011 : 16:09:56 [Permalink]

|

Originally posted by JerryB

| But Schrödinger is very clear that the result is in units of calories per degree Centigrade. |

Who cares? Have you had a chemistry course in your life? It doesn't matter if the constant is expressed in joules/kelvin or calories/centigrade. As long as the math is consistent in those values throughout the calculations you are going to get the same result.

1 calorie = 4.18400 joules,

Convert Celsius to Kelvin here:

http://www.metric-conversions.org/temperature/celsius-to-kelvin.htm |

This supports your contention that we can ignore the units on your entropy calculation how, exactly?

| My whole point is that we both used Boltzmann's constant. Now you are saying that the only thing we did in common was to use the B constant. YES. Now you get it......that was the point. |

But nobody cares about that.

| And we both used it to show statistical mechanics, him using atoms, me using genes. |

But your argument concludes that the input values you use are invalid. Sure, you used the same equation, but your input was garbage. Can I put the circumference and radius of a tire into the Boltzmann formula and learn the "linear entropy" of the wheels on my car? Of course not.

| 51% is all it takes to show a trend toward degradation. |

Not when James Crow provides the answer, too.

| If only 51% is all he meant, then that is enough to show an entropic increase. |

Not if there are anti-entropic processes involved, which Schrödinger claimed there are.

| Read the part I posted that is important: "the overall impact of the mutation process must be deleterious" Right. Thanks, James, that's all I needed to prove my point. |

Except that James Crow disagrees with your point.

| The genome hasn't ordered in 6 million years and there is NO evidence to show it did before that. Darwinism is a fairytale for grownups. |

Except that you haven't supported the idea that "deleterious mutation" is a synonym for "disorder," you've just assumed that to be true.

| You asked for a reference to show that mutations were mostly deleterious. Actually, you asked for one to back up my opinion that beneficial mutations would have been such a small minority as to not affect the study, however, since I clearly clarified by inserting (IMHO) that would not need a reference. |

Opinions are things thought to be true, but not to such a large extent as a "fact." As such, it's perfectly acceptable to ask for evidence supporting an opinion. You don't hold any opinions you think are false, do you?

| I provided you the Crow reference. |

Which does nothing to help quantify the entropy of any genome, nor did it support your contention that beneficial mutations are so rare that they could be ignored in your entropy calculation.

| But I previously asked you to provide me a reference that beneficial mutations could have been prevalent and you did not. |

I would never claim that beneficial mutations could have been prevalent, because they're not, so I don't know why you would ask me to support an opinion I disagree with.

| No, he quite plainly states that metabolic functions give a cell more energy than it turns into entropy. From a thermodynamic viewpoint, cells are open systems, exchanging both matter and energy with their environment. There's no reason to think that entropy can only rise in such a system. |

Nor would I, or did I ever state such a silly thing. Entropy can rise or fall in an open system. You have to calculate it to know what it's doing. I did. It's rising in the genome. |

No matter how many times you repeat this nonsense, it'll still be nonsense.

Here are more purely mathematical problems with the equations you've chosen to use:

Since the numerator is always 41471!, W can only vary with the proportion of deleterious mutations to non-deleterious mutations, and will never be below 1.000. The only way for it to get down to 1.000 is if the researchers had found precisely zero deleterious mutations, or if they'd found 41471 of them (because in either case, one of the two factorials in the denominator would be 1, and the whole denominator would then be equal to the numerator). And since W can't be below 1.000, S can never be negative, no matter what the researchers had found, so long as you insist that N1 plus N2 equals some constant (in this case, 41471), because factorials of negative numbers are undefined. Thus, you have ensured that your calculated result will always confirm your hypothesis, regardless of the actual numbers used, since nobody will ever claim that there are no deleterious mutations.

If the researchers had found only 0.00001 deleterious mutations per generation, your entropy would be calculated as 6.72×10^-28, or about five orders of magnitude smaller than your number, but you'd still be saying "entropy is increasing." If the researchers had found that half of the nucleotides were deleterious mutations, your calculation results in 1.28×10^-18 (five orders of magnitude larger than your result), and you'd still be saying "entropy is increasing."

But the really funny part is that if the researchers had found 41469.4 deleterious mutations per generation (that is, 41471 minus 1.6), your calculation would result in the exact same "entropy" it does at 1.6 deleterious mutations.

The way you're calculating it, the entropy is maximized at 50% bad mutations, and zero at both 0% and 100%. Fancy that! A genome with 100% deleterious mutations has zero entropy! A genome that's 99.9961% bad mutations has the same entropy as one with 0.0039% (1.6) bad mutations!
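The symmetry described above can be checked numerically. The following Python sketch is an illustration under stated assumptions, not the calculation either poster actually ran: it takes W = N! / (N1! × N2!) with N1 + N2 = N = 41471, uses the natural logarithm, uses the SI value of Boltzmann's constant, and handles non-integer counts such as 1.6 through the log-gamma function. The thread does not say which log base or units were used, so only the shape of the result (zero at the ends, a peak in the middle, mirror symmetry) should be read from it, not the absolute numbers.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (SI value; units are an assumption)
N_TOTAL = 41471     # total count used in the thread's formula


def ln_w(n_total: float, n_bad: float) -> float:
    """Natural log of W = N! / (N1! * N2!), with N2 = N - N1.

    math.lgamma(x + 1) equals ln(x!) and also accepts non-integer x.
    """
    n_good = n_total - n_bad
    return (math.lgamma(n_total + 1)
            - math.lgamma(n_bad + 1)
            - math.lgamma(n_good + 1))


def entropy(n_total: float, n_bad: float) -> float:
    """S = k * ln(W) for the two-category split above."""
    return K_B * ln_w(n_total, n_bad)


# Evaluate at both ends, at 1.6, at its mirror image N - 1.6, and at the midpoint.
for n_bad in (0, 1.6, N_TOTAL / 2, N_TOTAL - 1.6, N_TOTAL):
    print(f"N1 = {n_bad:>9}: S = {entropy(N_TOTAL, n_bad):.3e}")
```

Because swapping N1 and N2 leaves the denominator unchanged, the formula cannot tell "almost no bad mutations" apart from "almost all bad mutations".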

More later... |

- Dave W. (Private Msg, EMail)

Evidently, I rock!

Why not question something for a change?

Visit Dave's Psoriasis Info, too. |

|

|

|

JerryB

Skeptic Friend

279 Posts |

Posted - 01/03/2011 : 16:11:40 [Permalink]

|

Originally posted by R.Wreck

Way to miss the point. Again.

Simply put, you claimed that a rise in entropy = a loss of information.

|

Yes, and herein lies one of the major areas of confusion in thermodynamics (even many scientists I have debated are mixed up in this area). We can't just start mixing entropies and expect for it to be meaningful. There are probably a dozen different entropies I can think of that are used to study different systems. All of them legit and useful in their own, very specific situations.

Dave and I have been debating Boltzmann entropy (or Feynman if he doesn't like the energy/temp in the equation, and he can't decide if he does or doesn't).

It was Boltzmann who noted that his entropy is the opposite of information. When there is more entropy in a system, there is less information and vice versa.

That's why I cautioned you that we were not discussing Shannon entropy. Get these entropies confused and YOU will be confused.

Boltzmann entropy is a simple formula S = K logW where K is a constant, W is a statistical weight, or the number of ways that things (like genes or atoms) can be arranged and S is the entropy.

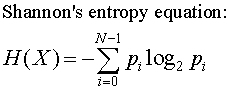

Shannon entropy is: H = -Σ p(x) log2 p(x), summed over the possible symbols x.

And you are going to come out in bits which is similar, but not the same. We have to be careful to keep our entropies straight.
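To make the two formulas concrete, here is a small Python sketch. It is an illustration only and rests on a simplifying assumption that is not in the post: all W arrangements are taken to be equally likely, in which case the Shannon entropy reduces to log2(W) bits. The function names are invented for the example, and the natural log and SI constant are assumptions as well.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (assumed SI units)


def boltzmann_entropy(w: float) -> float:
    """S = k * ln(W): thermodynamic entropy in J/K for W arrangements."""
    return K_B * math.log(w)


def shannon_entropy_bits_uniform(w: int) -> float:
    """H = log2(W): Shannon entropy in bits when all W outcomes are equally likely."""
    return math.log2(w)


w = 8  # hypothetical number of arrangements
s = boltzmann_entropy(w)
h = shannon_entropy_bits_uniform(w)

print(f"Boltzmann: {s:.3e} J/K")
print(f"Shannon:   {h:.1f} bits")

# Under the equal-likelihood assumption the two differ only by a constant factor.
print(math.isclose(s, K_B * math.log(2) * h))
```

Under that assumption the two quantities carry different units but rise and fall together, so any claim that one goes up while the other goes down has to come from how the quantities are interpreted rather than from the formulas themselves.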

That is ass-backward. So which is it? Is the genome increasing in entropy or losing information?

|

Both. It is not ass-backward. If entropy is the opposite of information, then when entropy goes up, information goes down.

So, I calculated entropy going up in the genome, that means information went down.

Are you with me? |

|

|

|

Dr. Mabuse

Septic Fiend

Sweden

9700 Posts |

Posted - 01/03/2011 : 16:17:42 [Permalink]

|

Originally posted by filthy

Is anyone else actually reading these long, tedious posts?

|

I am.

It's both as hilarious, and as frustrating, as a trip to the funny farm.

|

Dr. Mabuse - "When the going gets tough, the tough get Duct-tape..."

Dr. Mabuse whisper.mp3

"Equivocation is not just a job, for a creationist it's a way of life..." Dr. Mabuse

Support American Troops in Iraq:

Send them unarmed civilians for target practice..

Collateralmurder. |

|

|

|

Dave W.

Info Junkie

USA

26036 Posts |

Posted - 01/03/2011 : 16:18:53 [Permalink]

|

Originally posted by JerryB

If you take the position that the numbers are garbage... |

I don't.

| However, if you assume the numbers are accurate... |

I don't.

My argument doesn't use the numbers as a premise at all. The numbers are irrelevant to my argument. The accuracy of the numbers is irrelevant to my argument.

The accuracy of those numbers is a premise to your argument, which concludes that the numbers are garbage. Thus, your argument refutes itself.

| Either way you lose the debate. |

I lose the debate because you created a false dilemma. How infantile of you. |

|

|

|

|

podcat

Skeptic Friend

435 Posts |

Posted - 01/03/2011 : 16:36:52 [Permalink]

|

| I'm reading them. Actually, JerryB lies very well. |

“In a modern...society, everybody has the absolute right to believe whatever they damn well please, but they don't have the same right to be taken seriously”.

-Barry Williams, co-founder, Australian Skeptics |

|

|

|

JerryB

Skeptic Friend

279 Posts |

Posted - 01/03/2011 : 17:15:59 [Permalink]

|

Originally posted by Dave W.

This supports your contention that we can ignore the units on your entropy calculation how, exactly? |

Quit trying to play dumb and pretend that I did not also calculate this using Feynman's method which is pure statistical mechanics that contains no energy/temp. Anyone reading in here can tell you are just being disingenuous.

Go with Feynman's math if you don't like Boltzmann's. Either way you lose.

In fact, you are losing this entire debate badly and you are beginning to sense it. Good.

| But nobody cares about that. |

Of course you don't care about that. But that was my point, you are simply trying to obfuscate it into extinction. You won't get it done. This is not my first rodeo with you people educated on talk origins.

| But your argument concludes that the input values you use are invalid. Sure, you used the same equation, but your input was garbage. Can I put the circumference and radius of a tire into the Boltzmann formula and learn the "linear entropy" of the wheels on my car? Of course not. |

How silly is that...LOL. The input values I used came from a peer reviewed paper on your side. Have I yet sent you to a creationist web site? No, now you seem to be arguing that your own evolutionary biologists are as full of crap as a Christmas turkey. So my input was your input. Dissing your own people....WOW.

| Not if there are anti-entropic processes involved, which Schrödinger claimed there are. |

There could be. So now it is your plight to bring up references to show what that could be. And I ask the reader to note how shy Dave seems to be in quoting abstracted papers to support his argument.

What energy is involved in staving off SLOT from the genome? Does an ape hold his genome out in the sun and SLOT is overcome by the input of energy?

Nah, I doubt it. Schrodinger was absolutely correct in that SLOT is overcome in the ATP cycle by taking in energy from food, but what does this have to do with mutating genes? Answer only with references, please.

| Except that James Crow disagrees with your point. |

Reference, please.

| Except that you haven't supported the idea that "deleterious mutation" is a synonym for "disorder," you've just assumed that to be true. |

Um....No, I have shown mathematically entropy rising in the genome and therefore information decreasing.

| Opinions are things thought to be true, but not to such a large extent as a "fact." As such, it's perfectly acceptable to ask for evidence supporting an opinion. You don't hold any opinions you think are false, do you? |

Huh? LMAO

| I would never claim that beneficial mutations could have been prevalent, because they're not, so I don't know why you would ask me to support an opinion I disagree with. |

Then how in the heck could you ever claim that man walked from a protist if the genome did not become more complex from beneficial mutations? Was it magic?

Here are more purely mathematical problems with the equations you've chosen to use:

Since the numerator is always 41471!, W can only vary with the proportion of deleterious mutations to non-deleterious mutations, and will never be below 1.000. The only way for it to get down to 1.000 is if the researchers had found precisely zero deleterious mutations, or if they'd found 41471 of them (because in either case, one of the two factorials in the denominator would be 1, and the whole denominator would then be equal to the numerator). And since W can't be below 1.000, S can never be negative, no matter what the researchers had found, so long as you insist that N1 plus N2 equals some constant (in this case, 41471), because factorials of negative numbers are undefined. Thus, you have ensured that your calculated result will always confirm your hypothesis, regardless of the actual numbers used, since nobody will ever claim that there are no deleterious mutations.

If the researchers had found only 0.00001 deleterious mutations per generation, your entropy would be calculated as 6.72×10^-28, or about five orders of magnitude smaller than your number, but you'd still be saying "entropy is increasing." If the researchers had found that half of the nucleotides were deleterious mutations, your calculation results in 1.28×10^-18 (five orders of magnitude larger than your result), and you'd still be saying "entropy is increasing."

But the really funny part is that if the researchers had found 41469.4 deleterious mutations per generation (that is, 41471 minus 1.6), your calculation would result in the exact same "entropy" it does at 1.6 deleterious mutations.

The way you're calculating it, the entropy is maximized at 50% bad mutations, and zero at both 0% and 100%. Fancy that! A genome with 100% deleterious mutations has zero entropy! A genome that's 99.9961% bad mutations has the same entropy as one with 0.0039% (1.6) bad mutations!

More later...

|

Absolutely dumb. Of course it will always be above one because we are observing deleterious mutation and that HAS to show rising entropy. Had they observed a trend of beneficial mutations then the math would have to change.

-S = K log 1/W

You will get negentropy as Schrodinger suggested.

Your dramatic words mean nothing. I showed entropy rising in the human genome, you know it. You don't like it and you are coming at me with nonsensical guns blazing to refute it. References, please. |

|

|

|

Dave W.

Info Junkie

USA

26036 Posts |

Posted - 01/03/2011 : 20:05:00 [Permalink]

|

Originally posted by JerryB

Quit trying to play dumb and pretend that I did not also calculate this using Feynman's method which is pure statistical mechanics that contains no energy/temp. Anyone reading in here can tell you are just being disingenuous. |

No, Feynman's numbers have units on them, also.| Go with Feynman's math if you don't like Boltzmann's. Either way you lose. |

No, because your math is still wrong.| In fact, you are losing this entire debate badly and you are beginning to sense it. Good. |

You are projecting your own failures.| But nobody cares about that. |

Of course you don't care about that. But that was my point, you are simply trying to obfuscate it into extinction. You won't get it done. This is not my first rodeo with you people educated on talk origins. |

You don't show it. You're using creationist arguments which have been debunked for decades.| The input values I used came from a peer reviewed paper on your side. |

So if I pick any two numbers from any peer-reviewed papers, I can calculate some sort of entropy?| Have I yet sent you to a creationist web site? No... |

You don't need to: you pulled two numbers and did math with them. Math that no evolutionary biologist or statistical physicist has ever done before, or would ever do.| ...now you seem to be arguing that your own evolutionary biologists are as full of crap as a Christmas turkey. |

Once again, you are trying to deflect my criticisms of you onto other people.| So my input was your input. |

No, no scientist I'm aware of would ever use nucleotide counts as inputs to a Boltzmann formula, for reasons I've already discussed (and you haven't refuted) already.| Dissing your own people....WOW. |

Would be so if I were doing it, but I'm not.| Not if there are anti-entropic processes involved, which Schrödinger claimed there are. |

There could be. So now it is your plight to bring up references to show what that could be. |

I'll just cite Schrödinger's What is Life, chapter six, which discusses those very processes.| And I ask the reader to note how shy Dave seems to be in quoting abstracted papers to support his argument. |

Your own source supports my argument.What energy is involved in staving off SLOT from the genome? Does an ape hold his genome out in the sun and SLOT is overcome by the input of energy?

Nah, I doubt it. |

Of course you doubt it: you're improperly anthropomorphizing again.| Schrodinger was absolutely correct in that SLOT is overcome in the ATP cycle by taking in energy from food, but what does this have to do with mutating genes? |

You don't think the repair processes for DNA require energy? You don't think sex requires energy? You don't think the cellular processes which maintain and replicate DNA get their energy from cellular metabolism?| Answer only with references, please. |

When you do so, I'll follow suit.| Except that James Crow disagrees with your point. |

Reference, please. |

Go re-read your first James Crow paper, wherein he answers the question of why deleterious mutations haven't caused our extinction, yet. He doesn't use any design argument whatsoever.

| Except that you haven't supported the idea that "deleterious mutation" is a synonym for "disorder," you've just assumed that to be true. |

Um....No, I have shown mathematically entropy rising in the genome and therefore information decreasing. |

No, your calculation depends upon deleterious mutations being disorder, so that can't also be its conclusion. That would make your conclusion and your assumption identical, and thus a circular argument.

| I would never claim that beneficial mutations could have been prevalent, because they're not, so I don't know why you would ask me to support an opinion I disagree with. |

Then how in the heck could you ever claim that man walked from a protist if the genome did not become more complex from beneficial mutations? |

Evolution isn't driven solely by mutations. When will you stop trying to say that it is?

| Here are more purely mathematical problems with the equations you've chosen to use:

Since the numerator is always 41471!, W can only vary with the proportion of deleterious mutations to non-deleterious mutations, and will never be below 1.000. The only way for it to get down to 1.000 is if the researchers had found precisely zero deleterious mutations, or if they'd found 41471 of them (because in either case, one of the two factorials in the denominator would be 1, and the whole denominator would then be equal to the numerator). And since W can't be below 1.000, S can never be negative, no matter what the researchers had found, so long as you insist that N1 plus N2 equals some constant (in this case, 41471), because factorials of negative numbers are undefined. Thus, you have ensured that your calculated result will always confirm your hypothesis, regardless of the actual numbers used, since nobody will ever claim that there are no deleterious mutations.

If the researchers had found only 0.00001 deleterious mutations per generation, your entropy would be calculated as 6.72×10^-28, or about five orders of magnitude smaller than your number, but you'd still be saying "entropy is increasing." If the researchers had found that half of the nucleotides were deleterious mutations, your calculation results in 1.28×10^-18 (five orders of magnitude larger than your result), and you'd still be saying "entropy is increasing."

But the really funny part is that if the researchers had found 41469.6 deleterious mutations per generation, your calculation would result in the exact same "entropy" it does at 1.6 deleterious mutations.

The way you're calculating it, the entropy is maximized at 50% bad mutations, and zero at both 0% and 100%. Fancy that! A genome with 100% deleterious mutations has zero entropy! A genome that's 99.9961% bad mutations has the same entropy as one with 0.0039% (1.6) bad mutations!
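The symmetry described here can be checked numerically. Below is a minimal sketch in Python, assuming the statistical weight is W = N!/(N1! N2!) with N = 41471 and N1 + N2 = N, as described in the post. The function names `log_w` and `entropy` are made up for illustration, K is assumed to be Boltzmann's constant with a natural log, and `math.lgamma` stands in for the factorial so that non-integer counts like 1.6 can be used. The absolute values depend on the choice of K and log base, so only the shape of the curve is of interest.

```python
import math

K = 1.380649e-23  # assumed: Boltzmann constant in J/K
N = 41471         # total nucleotides, from the post


def log_w(n_bad, n_total=N):
    """ln W for W = N! / (N1! * N2!), with N1 + N2 = N.

    lgamma(x + 1) equals ln(x!) and also accepts non-integer x such as 1.6.
    """
    n_good = n_total - n_bad
    return (math.lgamma(n_total + 1)
            - math.lgamma(n_good + 1)
            - math.lgamma(n_bad + 1))


def entropy(n_bad, n_total=N):
    """S = K ln W (natural log is an assumption)."""
    return K * log_w(n_bad, n_total)


# 0% bad, 1.6 bad, 50% bad, all but 1.6 bad, 100% bad
for n_bad in (0, 1.6, N / 2, N - 1.6, N):
    print(f"{n_bad:>10}: S = {entropy(n_bad):.3e}")
```

Under these assumptions S comes out as zero at both ends, peaks at the halfway point, and is the same for 1.6 and 41469.4 bad mutations, because swapping N1 and N2 leaves the denominator unchanged.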

More later...

|

Absolutely dumb. Of course it will always be above one... |

Thank you for agreeing that your calculation involves an unfalsifiable hypothesis.

| ...because we are observing deleterious mutation and that HAS to show rising entropy. |

Thank you again for insisting in all caps that your hypothesis has no possible falsification, and thus isn't scientific.

| Had they observed a trend of beneficial mutations then the math would have to change.

-S = K log 1/W

You will get negentropy as Schrodinger suggested. |

Nononono. How utterly stupid. Putting a negative sign in front of the S doesn't change the value of S itself, at all. All you've done is say that K log W = -K log 1/W, which we all know to be true by the definition of logarithm.

And as I pointed out, you would need to change the way you calculate W because you can't put a negative number through the factorial function. If you came up with a calculation for W which could result in any positive value (not just values over 1.0), then S = K log W could result in negative values for S, or "ordering" as you put it. I don't think you can do so, only because you don't actually understand what you're calculating when you calculate W, and so couldn't find a suitable equation if it bit you.
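The point about the sign can be checked in a few lines. This is a small sketch, assuming K is Boltzmann's constant and a natural log; the helper names `s_direct` and `s_inverted` are hypothetical. It solves -S = K log(1/W) for S and compares the result with S = K log W.

```python
import math

K = 1.380649e-23  # assumed: Boltzmann constant in J/K


def s_direct(w):
    """S from S = K ln W."""
    return K * math.log(w)


def s_inverted(w):
    """S obtained by solving -S = K ln(1/W) for S."""
    return -(K * math.log(1.0 / w))


for w in (1.0, 2.0, 1e6):
    print(w, s_direct(w), s_inverted(w))

# S only goes negative when W itself drops below 1.
print(s_direct(0.5) < 0)
```

Both forms give the same S for every W, so rewriting the formula does not produce a negative entropy by itself. That only happens if W can fall below 1.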

This hypothesis (that you don't understand the math you're using) is further supported by the fact that you failed to even try to respond to the much more serious criticism of your math, that a 100% deleterious mutation rate results in an entropy, by your equation, of zero. According to your math, 41471 bad mutations would result in less "genetic entropy" than 1.6 bad mutations.

| Your dramatic words mean nothing. I showed entropy rising in the human genome, you know it. You don't like it and you are coming at me with nonsensical guns blazing to refute it. |

This is worthless rhetoric, and does nothing to advance your argument. You fail by your own standards.

I need references to show that your math is wrong? Wow. Next thing I know, you'll be asking me for citations that 2+2=4.

Here's another problem with your math: in all physics equations, addition or subtraction requires matching units. If the units don't match, you know you're doing something wrong. The first number in your calculation, 41469.6, comes from subtracting deleterious mutations per person per generation from total nucleotides. That's like subtracting 60 MPH from 12 miles. It makes no sense, and invalidates your whole mathematical argument.

Furthermore, entropy is not a "tendency" as you've claimed. Entropy is a measurement of energy unavailable to do work (in thermodynamics) or a measurement of the "number of ways" things can be arranged (Feynman, Shannon). Those are spot measurements, not trends. Where you, Jerry, make the mistake that entropy is a tendency is in the fact that SLOT says that in an isolated system, entropy (S) will tend to increase.

The Boltzmann formula has no Δt or any other such variable in it. It is a spot measurement, it does not reveal tendencies. Inputting a trend value like "deleterious mutations per generation" doesn't magically turn S into ΔS. The Second Law of Thermodynamics discusses ΔS. Boltzmann's formula does not.

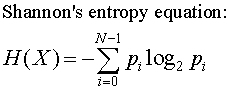

Oh, you also wrote:

| That's why I cautioned you that we were not discussing Shannon entropy. Get these entropies confused and YOU will be confused.

Boltzmann entropy is a simple formula S = K logW where K is a constant, W is a statistical weight, or the number of ways that things (like genes or atoms) can be arranged and S is the entropy.

Shannon entropy is:

H = -Σ p(i) log2 p(i)

And you are going to come out in bits which is similar, but not the same. We have to be careful to keep our entropies straight. |

That's funny as hell. In statistical mechanics, the entropy of a system not in equilibrium is:

S = -k Σ p(i) ln p(i)

so we can see that the Shannon entropy equation is the exact same equation as the statistical mechanics entropy, with k=1, and a base-2 log instead of natural log (which, since logs to different bases are proportional - ln X = 2.3026 log X, for example - are interconvertible). The equations are only different for convenience of units, not because they're fundamentally different. That's why Shannon said that thermodynamics is just applied information theory. |
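The proportionality can be illustrated with a short sketch. It assumes the two equations being compared are the usual textbook forms, S = -k Σ p ln p for statistical mechanics and H = -Σ p log2 p for Shannon. The function names and the example distribution are made up for illustration.

```python
import math


def gibbs_entropy(probs, k=1.0):
    """S = -k * sum(p * ln p), in units of k (nats when k = 1)."""
    return -k * sum(p * math.log(p) for p in probs if p > 0)


def shannon_entropy_bits(probs):
    """H = -sum(p * log2 p), in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


probs = [0.5, 0.25, 0.125, 0.125]  # hypothetical distribution
s_nats = gibbs_entropy(probs)
h_bits = shannon_entropy_bits(probs)

# Dividing by ln 2 converts nats to bits, so the two numbers agree.
print(h_bits, s_nats / math.log(2))

# The conversion factor between natural and base-10 logs, about 2.3026.
print(math.log(10))
```

With k = 1 the two results differ only by the constant factor ln 2, which is a change of units rather than a different quantity.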

- Dave W.

Evidently, I rock!

Why not question something for a change?

Visit Dave's Psoriasis Info, too. |

|

|

|

JerryB

Skeptic Friend

279 Posts |

Posted - 01/04/2011 : 05:33:46

|

Originally posted by Dave W.

| No, Feynman's numbers have units on them, also. |

Then go ahead and redo the Feynman math with energy/temp in it. This has to be good.

| So if I pick any two numbers from any peer-reviewed papers, I can calculate some sort of entropy? |

No, and that's a silly question. Here are two numbers: 10 and 50. Now show me an entropic trend in that system and how it would be meaningful.

| You don't need to: you pulled two numbers and did math with them. Math that no evolutionary biologist or statistical physicist has ever done before, or would ever do. |

Well, thank you. You just keep complimenting me for being an innovative voice in science.

And no scientist has ever applied entropy to biological systems or genes, have they:

http://www.newscholars.com/papers/Durston&Chiu%20paper.pdf

| Once again, you are trying to deflect my criticisms of you onto other people. |

Um...no. You keep criticizing my use of THEIR figures and attempting everything you can to make people think that "I" made up those figures and that they did NOT come from a peer-reviewed paper from people on your side. Sorry.

| And as I pointed out, you would need to change the way you calculate W because you can't put a negative number through the factorial function. If you came up with a calculation for W which could result in any positive value (not just values over 1.0), then S = K log W could result in negative values for S, or "ordering" as you put it. I don't think you can do so, only because you don't actually understand what you're calculating when you calculate W, and so couldn't find a suitable equation if it bit you. |

I understand exactly what I'm calculating. You just don't like the results because it upsets your apple cart. And yes, you can put a negative number through the factorial function using the Gamma function. But, since genomes don't order over time as you think they do, we will never have to use the Gamma function.

Of course, we wouldn't even need to do that if a situation did rise. As I told you earlier we can use the positive factorial function. It's obvious that one will know whether they are plugging in deleterious or beneficial mutations. If we are calculating beneficial, just turn the formula around as Schrodinger did: -S = K log1/W. You will show an ordering genome.
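For reference, here is what the Gamma function extension of the factorial gives in practice. This is a minimal sketch using Python's standard `math.gamma` and the identity x! = Gamma(x + 1); the helper name `factorial_via_gamma` is hypothetical.

```python
import math


def factorial_via_gamma(x):
    """x! extended to non-integers via x! = Gamma(x + 1)."""
    return math.gamma(x + 1)


print(factorial_via_gamma(5))      # 120.0, matches 5!
print(factorial_via_gamma(1.6))    # a non-integer count is fine
print(factorial_via_gamma(-2.5))   # a negative non-integer also works

try:
    factorial_via_gamma(-1)        # Gamma(0) is a pole
except ValueError as err:
    print("(-1)! is undefined:", err)
```

The extension covers negative non-integers, but it stays undefined at the negative integers, where the Gamma function has poles.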

| This hypothesis (that you don't understand the math you're using) is further supported by the fact that you failed to even try to respond to the much more serious criticism of your math, that a 100% deleterious mutation rate results in an entropy, by your equation, of zero. According to your math, 41471 bad mutations would result in less "genetic entropy" than 1.6 bad mutations. |

Here you go again, trying to pass off the math from the University of New South Wales, physics department as my math. And there is no such thing as a 100% deleterious mutation rate, the population would be extinct and you wouldn't have anything to calculate. Yup, entropy WOULD be zero.

http://www.phys.unsw.edu.au/PHYS3410/lecture5.html

| I need references to show that your math is wrong? Wow. Next thing I know, you'll be asking me for citations that 2+2=4. |

Yeah, I need some references to show the math wrong. I gave you one to show it correct. Is the inverse asking too much? In fact, you have shown little reference for almost anything you have posited in this discussion.

| Here's another problem with your math: in all physics equations, addition or subtraction requires matching units. If the units don't match, you know you're doing something wrong. The first number in your calculation, 41469.6, comes from subtracting deleterious mutations per person per generation from total nucleotides. That's like subtracting 60 MPH from 12 miles. It makes no sense, and invalidates your whole mathematical argument. |

That's bunk. I added and multiplied mutated nucleotides and total nucleotides. It is assumed in that study that every generation has the same number of nucleotides. But if it makes you feel better, use total substitutions and total nucleotides; you are not going to be any better off.

| Furthermore, entropy is not a "tendency" as you've claimed. Entropy is a measurement of energy unavailable to do work (in thermodynamics) or a measurement of the "number of ways" things can be arranged (Feynman, Shannon). Those are spot measurements, not trends. Where you, Jerry, make the mistake that entropy is a tendency is in the fact that SLOT says that in an isolated system, entropy (S) will tend to increase. |

Wrong again: "Entropy is the tendency for all matter and energy in the universe to evolve toward a state of inert uniformity or total randomness."

http://www.tompotter.us/entropy.html

While it is true that the original definition by Clausius was entropy is energy unavailable to do work, this definition is applicable ONLY in classical thermodynamics and we are not studying heat, are we.

SLOT: With every spontaneous reaction entropy will tend to increase. Do you know what a spontaneous reaction is in chemistry?

Spontaneous reactions are exothermic reactions. They release heat and therefore increase entropy. But are all reactions exothermic? No. Some are endothermic and absorb heat. These lower entropy. This is why SLOT is a tendency. And this should be common sense to you.

| The Boltzmann formula has no Δt or any other such variable in it. It is a spot measurement, it does not reveal tendencies. Inputting a trend value like "deleterious mutations per generation" doesn't magically turn S into ΔS. The Second Law of Thermodynamics discusses ΔS. Boltzmann's formula does not. |

It is not a spot measure when the data you plug into it is not a spot measure and is an average of a trend per generation over 6 million years. You are still harping on this? .....And again, you are climbing back into the 1800s and into the Classical Thermodynamic era when little was known in thermodynamics regarding statistical mechanics.

If you want a delta, just change formulas: deltaS = deltaQ/T works nicely for heat exchanges, but we are not talking heat exchanges.

Of course, we can still show change in statistical entropy via S = K logW by taking two or more calculations and plugging them into the formula deltaS = S2 - S1. No big whoop and quite simple thermodynamics.

Glad you found it entertaining. I'm laughing my ass off at this entire post.

In statistical mechanics, the entropy of a system not in equilibrium is:

S = -k Σ p(i) ln p(i)

so we can see that the Shannon entropy equation is the exact same equation as the statistical mechanics entropy, with k=1, and a base-2 log instead of natural log (which, since logs to different bases are proportional - ln X = 2.3026 log X, for example - are interconvertible). The equations are only different for convenience of units, not because they're fundamentally different. That's why Shannon said that thermodynamics is just applied information theory.

|

And I'll bet if I take a shovel and dig for two hours, I might uncover a point somewhere in that diatribe? |

| Edited by - JerryB on 01/04/2011 07:52:27 |

|

|

|

Fripp

SFN Regular

USA

727 Posts |

Posted - 01/04/2011 : 07:51:13

|

Originally posted by JerryB

In fact, you are losing this entire debate badly and you are beginning to sense it. Good.

|

Sorry Jerry, but as one who is reading these posts, it is you who is getting his ass handed to him on a plate. It is your posts that are tedious. I am sure that I am not alone in this assessment. But I am also sure that you will claim that it is us who are close-minded and suffering from "groupthink" and that only you have discovered THE TRUTH.

But feel free to proclaim victory without a shred of evidence. It wouldn't be the first thing you claim unsupported by evidence. |

"What the hell is an Aluminum Falcon?"

"Oh, I'm sorry. I thought my Dark Lord of the Sith could protect a small thermal exhaust port that's only 2-meters wide! That thing wasn't even fully paid off yet! You have any idea what this is going to do to my credit?!?!"

"What? Oh, oh, 'just rebuild it'? Oh, real [bleep]ing original. And who's gonna give me a loan, jackhole? You? You got an ATM on that torso LiteBrite?" |

|

|

|

JerryB

Skeptic Friend

279 Posts |

Posted - 01/04/2011 : 07:57:44

|

Originally posted by Fripp

Originally posted by JerryB

In fact, you are losing this entire debate badly and you are beginning to sense it. Good.

|

Sorry Jerry, but as one who is reading these posts, it is you who is getting his ass handed to him on a plate. It is your posts that are tedious. I am sure that I am not alone in this assessment. But I am also sure that you will claim that it is us who are close-minded and suffering from "groupthink" and that only you have discovered THE TRUTH.

But feel free to proclaim victory without a shred of evidence. It wouldn't be the first thing you claim unsupported by evidence.

|

He's struggling and if he doesn't overcome the math, he's lost it. Note that in the last post he is making a last ditch attempt to do exactly that.

I'm not claiming victory just yet. But if I ever do, will I expect you observers to admit it? Nah. I know that will never happen because it would upset your belief system and most will go to any length to avoid that, if possible.

Anyhow, I'm sure my posts are tedious since I am the only one arguing this side. I apologize for that. But the only alternative would be not to answer the posts point by point. |

| Edited by - JerryB on 01/04/2011 08:25:12 |

|

|

|

|

|

|
